startups

First they pushed back. Now they want clarity.

For months, startup founders have been begging the federal government to consider their interests as it crafts guardrails for artificial intelligence in health care, and to ensure that regulation — intended to prevent pitfalls like algorithmic bias — doesn't inhibit new technology. As Washington forges ahead, founders and investors tell me there's still a lot of confusion about what'll be regulated and how, and they're urging policymakers to issue more specific guidance as soon as possible. I discussed the issue with several prominent experts, including Abridge's Zachary Lipton, Y Combinator's Surbhi Sarna, Andreessen Horowitz's Julie Yoo, and the Consumer Technology Association's René Quashie. Read more here.

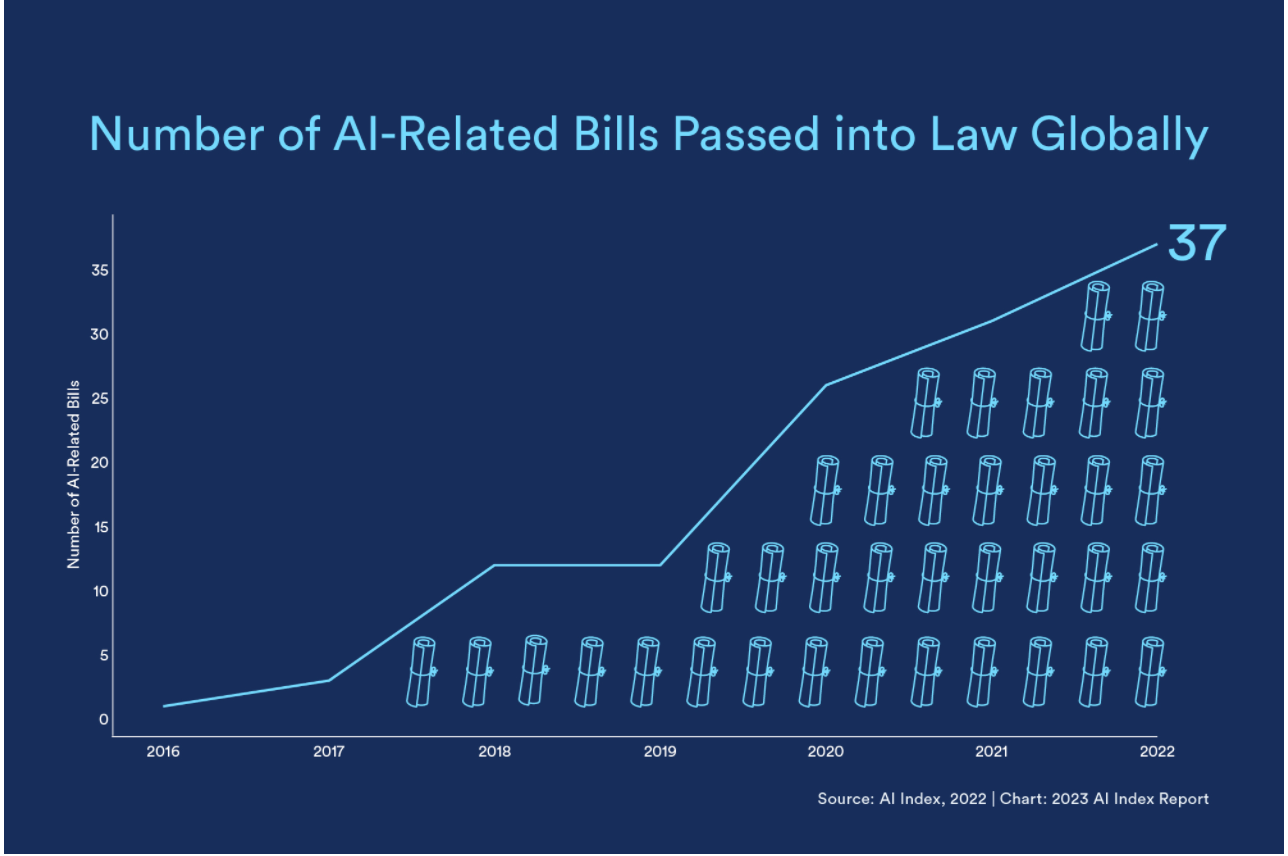

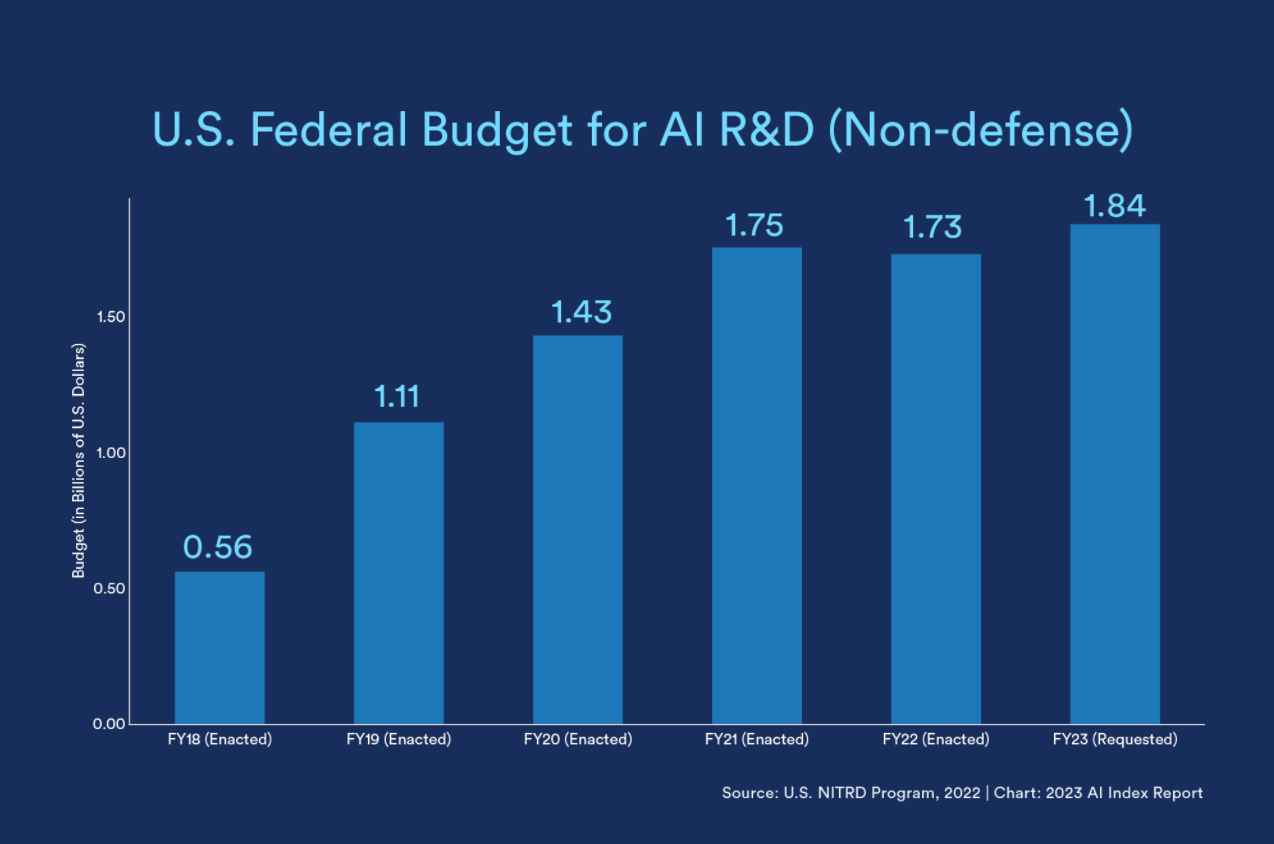

(The first chart above, from Stanford's Institute for Human-Centered Artificial Intelligence last year, captures the global uptick in regulation. The second shows that the U.S. federal budget dedicated to AI R&D has also climbed.)

In the meantime, there's a lot that didn't make it into that story that I'd still like to share. For instance, I chatted with Lipton, who spoke at a Senate AI forum last year, about the somewhat amorphous concept of "red-teaming," a so-called "adversarial testing" protocol often cited by tech founders as a key defense against AI's fallibility or misuse. In general, they're talking about a process in which a company deploys its own staff to try to break a tool it's building, with the aim of preventing harm down the line.

But in practice, red-teaming is a "completely undefined category" that doesn't imply any specific workflow or process, Lipton, previously an AI researcher at Amazon and currently an associate professor at Carnegie Mellon, told me. "It's not clear what makes this a valid red team," he said. "It's not even clear at the outset what constitutes harm."

I also spoke with a handful of other founders who said they're keeping close tabs on AI regulations, however vague.

"We've got to be as strict as possible" on issues like privacy to improve the odds of meeting federal benchmarks in the future, said Jennifer Hinkel, a health economist at the Data Economics Company, which is exploring the use of AI and large language models to make market predictions based on clinical outcomes. To stave off the risk of patients being re-identified from their data, the company is considering meeting rigorous European privacy standards, she said. But compliance can be tricky and expensive, requiring regular audits and strict internal protocols, and it's not always within reach for startups.

"It's scary for tech startups to think about having to deploy that level of capital to research and development, and testing and safety…the lack of clarity around the regulation contributes to that [pressure] as much as the volume of regulation."

"We have to not just see where things are but also have a sense of where things are going," said Sandeep Dave, a Duke professor and founder of Data Driven Bioscience, a cancer diagnosis startup that participated in Y Combinator's incubator program in 2018 and works with pharma companies and academic hospitals.

As the company begins overlaying its original DNA and RNA assays with AI tools, it's increasingly relying on its in-house regulatory experts to track new bulletins on AI from the White House, the Food and Drug Administration and other agencies, Dave said. The goal is to ensure the company is on track to meet standards for clinical validation and transparency.

"AI isn't the main focus of our test, but someday that piece will become quite important," he said. "For now our approach is to simply keep an eye on the evolving nature of things."

And the federal regulatory confusion is only compounded by the proliferation of AI bills at the state level, Manatt Health's Jared Augenstein told me.

"We're at a time when the federal government, the legislative and executive branches, are ramping up their regulatory activity but aren't yet at the point where they've issued clear, concise, comprehensive guidance, and so states are sort of stepping in," he said.

telehealth

Study: Abortion pills via telehealth are just as safe as in person

My STAT colleague Annalisa Merelli reports on a finding that reproductive rights groups have touted for years: abortion pills dispensed via video chat are just as safe as those prescribed in person. The latest study, in Nature Medicine, comes as the Supreme Court prepares to hear arguments on access to abortion medication next month. The study should affirm regulators' decision to ensure the medication is broadly available, the lead authors said. Read more.
