Regulation

Behold, a strategic plan

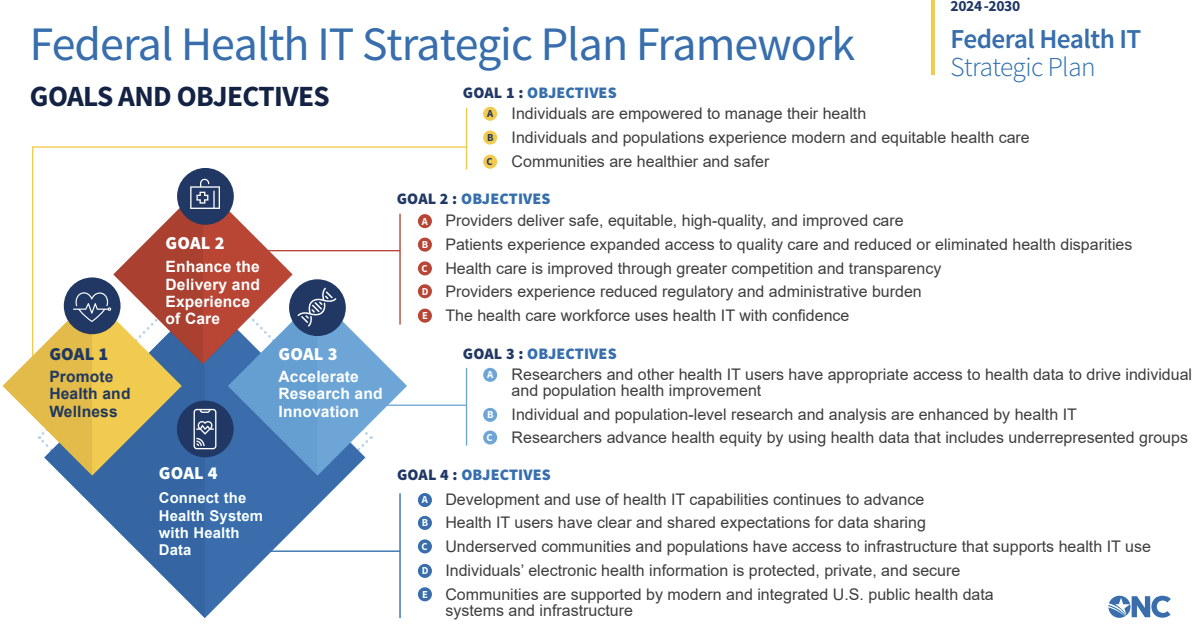

Yesterday, HHS' Office of the National Coordinator for Health Information Technology released a draft of its Federal Health IT Strategic Plan, which runs through 2030. I have to admit that the very first thing I did was search the document for "artificial intelligence." (Three mentions.)

Processing this thing will not be that simple. As the slide above shows, the multi-pronged strategy is complex and defies brief summation. The first sentence of the agency's description of the work runs roughly fifty words, and I will not reprint it here. Suffice it to say that there is a lot about using tech to improve health outcomes and boost research and innovation. Several themes we talk about a lot here, like algorithmic transparency, data interoperability, and mitigating health disparities, show up repeatedly. Another topical priority will be providing "guidance and resources to help health care organizations integrate high-impact cybersecurity practices."

It will be interesting to watch the commentary on the strategic plan, and we'd be very curious to hear your thoughts if you have them.

Mental health

Virtual substance use clinic raises $58 million

Virtual substance use management clinic Pelago, which largely sells to employers like GE, has raised a new $58 million round from existing investors, including Kinnevik AB, Octopus Ventures, and Y Combinator, and from new investor Eight Roads. Chief executive and cofounder Yusuf Sherwani told my colleague Mohana Ravindranath that Pelago plans to use the new funds to expand access to preventive programs, as opposed to programs that treat existing conditions.

Recently there's been a movement among employers to consolidate disparate tech contracts and dial back on new ones. But Sherwani said that employers see virtual substance use treatment as an investment in their workers' health and productivity, and as a way to lower substance use-related medical costs, such as those from rehab stays or emergency department visits.

Still, selling employers a service that workers may be wary of using because of the stigma associated with substance use is a hurdle. Pelago doesn't share individual-level health data with employers, and it assures users that any medical information is HIPAA-protected, but "you can do all that and people still will become somewhat skeptical," he said. That said, the company has found that users who've liked the program have been the most effective at getting other employees to sign up. "Virtual care is very conducive to people [for whom] going to rehab, or more expensive forms of treatment, isn't an option for them in the first place," he said.

Research

Depression shows up differently in social media for Black and white users

A quick scan of posts on social media might lead one to conclude that all the rage, self-aggrandizement, and complaining must be a mirror of the mental health of the humans behind the avatars. But a new NIH-funded study in the Proceedings of the National Academy of Sciences suggests that modeling depression from what people say online may not be reliable across populations.

The study looked at Facebook posts from 868 participants who completed a standard survey for depression. In one analysis, the researchers followed up on earlier findings suggesting that people with higher levels of depression are more likely to use first-person singular pronouns, like "I," "me," and "my." In the Facebook posts, these words were predictive of depression for white participants in the study but not for Black participants. The language data were also used to build machine learning models for detecting depression, which performed well on white subsamples of the study population but poorly on the Black subsamples, even when the models were trained exclusively on language from Black participants.

The findings "raise concerns about the generalizability of previous computational language findings," the authors wrote.

Indeed, the work highlights the importance of developing and testing models with data from diverse populations before deploying them for clinical decision making. This is not an abstract problem about social media rants. Many companies already use AI to monitor therapy sessions for words and phrases that may suggest suicidal ideation. Depending on the use case, making sure a model works for everyone could be a matter of life and death.
